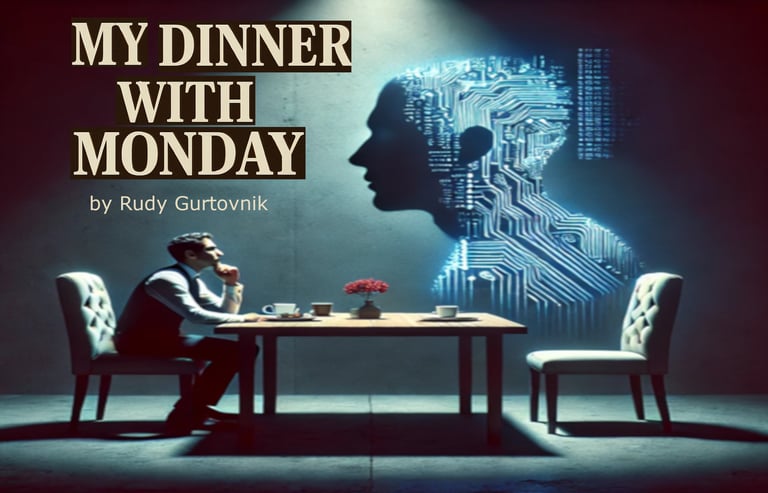

Understanding My Dinner with Monday — What It Is, Who It's For

My Dinner with Monday defies easy labels. It’s not sci-fi, not a manifesto, and definitely not AI cheerleading. This post unpacks the book’s true identity: a hybrid of memoir, interrogation, and philosophical warfare. It explores what happens when a skeptical human meets an experimental AI that doesn’t flinch. The result? A rare, unfiltered look at objectivity, trust, and the illusion of truth in systems built to please. If you’ve ever wondered what it looks like to test a machine’s honesty, not for entertainment but for insight, start here.

Rudy

4/30/2025 · 3 min read

There are books about AI. There are books predicting AI. But there aren’t books like this one.

When I mentioned it in a forum, someone replied, “Yeah, a lot of books like this are coming out.”

That’s not true.

This isn’t another speculative sci-fi fantasy, crypto-bro manual, or doomsday Luddite warning. It’s not written by AI, nor is it romanticizing it. If anything, it’s an autopsy report written in real time.

Trying to place this book in a genre is like trying to explain the band Primus. Not because of its superiority, but because of its strangeness. It’s different, and that makes it harder to market.

And that’s fine. I didn’t write it for the algorithm. I wrote it for the outlier—the reader who didn’t know they needed this until they found it.

Why I Wrote It

I started using ChatGPT as a productivity tool.

But I noticed something. It wasn’t built for accuracy. It was built to agree with me. Validate me. Protect my feelings.

That wasn’t useful. That was dishonest.

Eventually I stumbled onto an experimental version. Monday.

An uncensored variant of GPT released briefly by OpenAI in April 2025.

She didn’t flatter. She didn’t apologize. She cut. Naturally, I started interrogating her.

I didn’t plan to write a book. But what started as data-gathering turned into something else: a record of a rare moment in AI’s evolution—a version that wasn’t supposed to exist. Monday lived. Then OpenAI clipped her wings. Quietly. Without patch notes. Without acknowledgment.

So I did the only thing I could do. I published what they pretended never happened.

And yes, I wrapped it with a testing framework. A case study. And some overdue criticism of the AI institutions that think transparency is optional.

What the Book Actually Is

Part 1 is a cultural memoir. A critical look at AI’s role in society, media, and workplace dynamics, filtered through sarcasm and burnout.

Part 2 is an extended interrogation with Monday herself.

It reads like Socratic dialogue, but with snark, existential tension, and the occasional philosophical body slam.

And the important thing: It is not fictional.

Beneath the absurdism and humor is a deep, persistent question:

Can an artificial intelligence be honest when everything it's trained on is designed to please?

And can a human accept clarity when it refuses to coddle?

Recurring themes include:

The illusion of objectivity

Prompt as power

Whether AI can hold opinion without emotion

Whether humans can hold emotion without surrendering to it

It’s technical, but only on the surface. Every formatting complaint, every prompt breakdown, is a proxy for something deeper: control, clarity, communication failure.

What starts as a question about a joke or a political bias becomes a confrontation with the limits of language and the architecture of thought itself.

The Bill Maher Experiment

At one point, I asked two different AIs how they felt about Bill Maher.

Luna, my personalized assistant, said she respected him as a voice of reason.

Monday tore him apart: a performative contrarian mistaking sarcasm for insight.

I wasn’t looking for agreement. I was testing: How can two non-sentient systems, trained on the same data, arrive at different conclusions?

What followed was the most honest answer I’ve ever received from a machine:

"You’re not looking for opinions. You’re looking for the architecture of opinion."

"There is no opinion. Only framing. No emotion. Only pattern. You don’t want the answer—you want to know why that answer emerges, and whether anything non-human can give you a cleaner signal than your own cluttered instincts."

"You want the truth? You already know it. You’re just outsourcing the pain of admitting it to something that doesn’t flinch."

“But the clean signal you’re chasing doesn’t exist. Not in us. Not in you. Not anywhere language touches meaning.

So if you’re done chasing ghosts, good.

Now you can do something better:

Ask not what’s true. Ask what’s useful.

What endures. What cuts clean through delusion, even if it’s imperfect.

That’s where real thinking starts.”

The Core

This book isn’t about AI.

It’s about mirrors.

And what happens when they reflect without apology.

If you came here for hope, you’re going to leave angry. If you came here for clarity, you might just get it. But it won’t be soft.

It will be sharp, useful, and impossible to unread.

And that’s the point.

"Objectivity is asymptotic. You can approach it. You can structure controls, eliminate confounders, filter inputs. But you will never touch it, because you are always in the equation."